Memory Systems Refresher

Summary

- Naive Memory Model

- Cache Motivation

- Data Costs

- Memory Hierarchy

- Locality and Cache Blocks

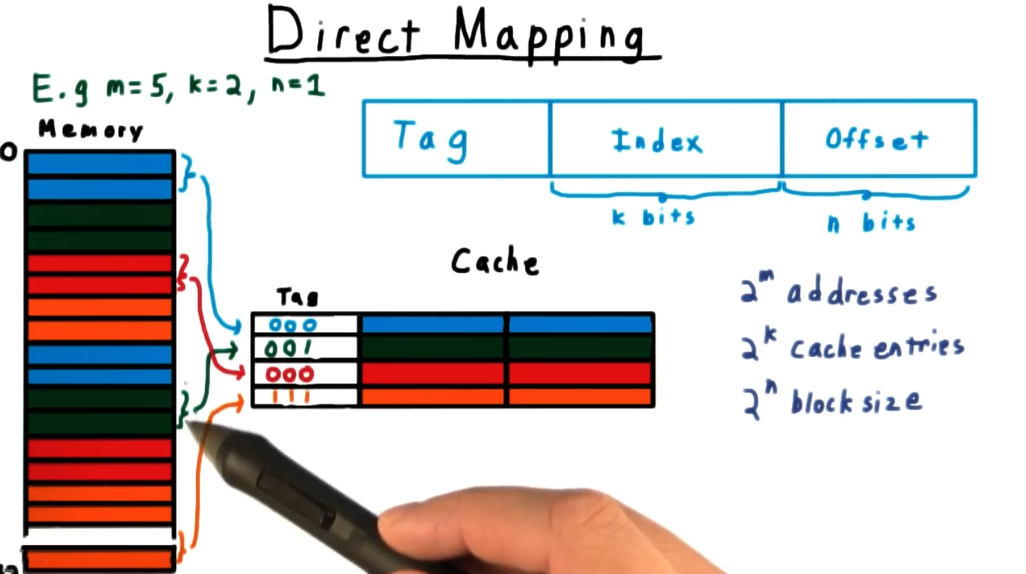

- Direct Mapping

- How many bits

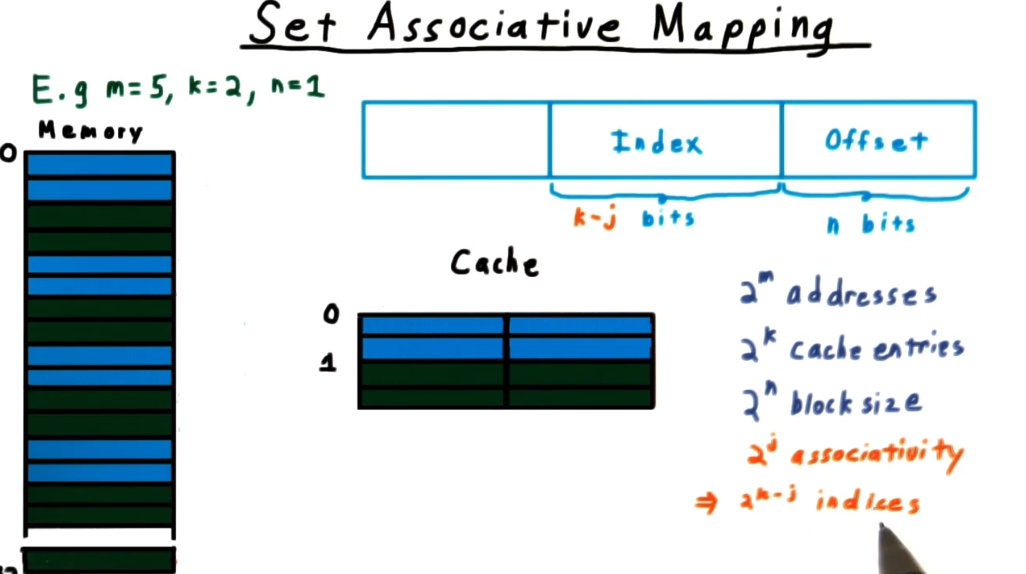

- Set Associative Mapping

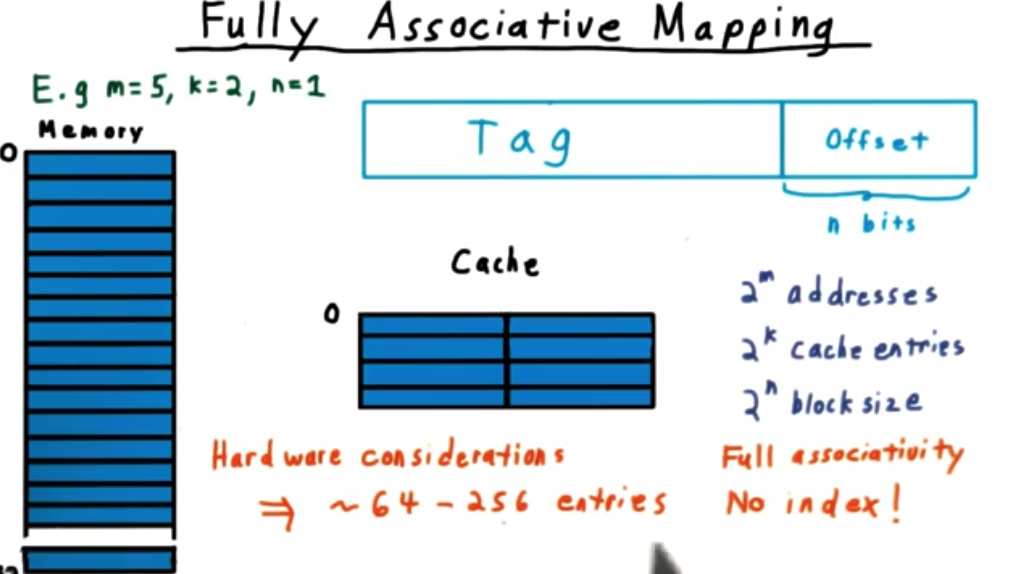

- Fully Associative Mapping

- Write Policy

- Virtual Memory Abstraction

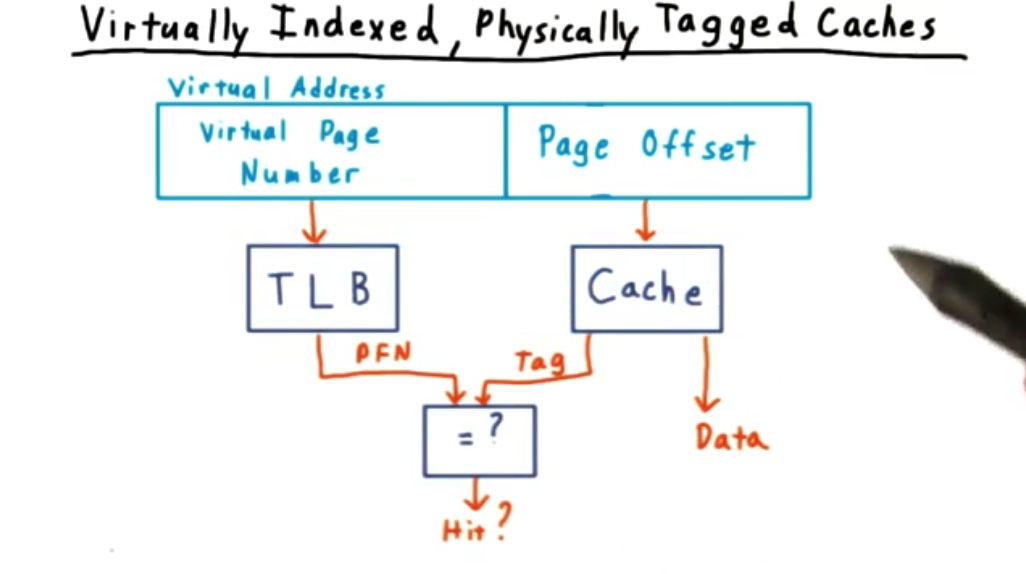

- Virtually Indexed, Physically Tagged Caches

Naive Memory Model

CPU can talk to memory or disk.

Memory faster than disk

Cache Motivation

for (i = 0; i < N; i++)

sum += a[i];

sum is a local variable, in cpu register, fast to access

a[i] may be in main memory, slower to access

- 100 cpu cycle latency

- 2 bytes/cycle

We can put a faster cache, closer to cpu and store a[i] there

- 10 cpu cycle latency

- 256 bytes/cycle

If data is in cache, we call it a “hit”, otherwise its a “miss”

Data Costs

Suppose data retrieving costs

- 4 cycles on a hit

- 100 cycles on a miss

How many cycles does a process with a 99% hit rate spend on avg?

- 4.96

90%?

- 13.6

Memory Hierarchy

There’s always a trade-off between speed and capacity

Locality and Cache Blocks

Locality assumes if a program needs one particular memory address, it will need the nearby addresses soon

Temporal locality refers to the tendency to access the same memory close in

time

- Put data in cache right after you use it, in case you need it again soon

Spatial locality refers to the tendency to access the same memory close in

terms of address

- Put whole block of data in cache, not just the bits you accessed

- Higher order bits of address tell us quickly what block we’re in

Direct Mapping

Suppose a 5 bit address space, fill in the table

| Index | Tag | Index | Offset |

|---|---|---|---|

| 00 | 01 | 0xFC | 0x31 |

| 01 | 10 | 0x12 | 0x82 |

| 10 | 10 | 0x57 | 0x48 |

| 11 | 00 | 0x63 | 0x99 |

| Address | Hit/Miss | Value |

|---|---|---|

| 10001 | 00 | |

| 00111 | 00 | |

| 10100 | 00 | |

| 01111 | 00 |

answer

| Address | Hit/Miss | Value | Reason |

|---|---|---|---|

| 10001 | Miss | ||

| 00111 | Hit | 0x99 | Index of 11, tag of 00, offset of 1 |

| 10100 | Hit | 0x57 | Index of 10, tag of 10, offset of 0 |

| 01111 | Miss |

How many bits

22 bit adress space

512 byte cache

64 byte block size

How many bits are used for the index?

- 3

How many bits are used for the tag?

- 13

Set Associative Mapping

Associating memory addresses with more than one block in the cache.

Ignores most significant bit of index (for 2-way)

Allows a cache block to be stored in two possible places

- Requires checking two tags to see if we hit

- Means less likely to miss

Fully Associative Mapping

Write Policy

Hit:

- write-through

- Write to cache, then main memory

- write-back

- only write to cache

- useful if no other process needs updated version from main memory

Miss:

- write-allocate

- read miss + write hit

- no-write-allocate

- don’t bother with cache

Virtual Memory Abstraction

Key benefit is to trick process into thinking it has a memory space all to itself

Adress Translation

A layer of indirection allows processes to not overwrite variables

Also allows for more virtual memory than phsyical memory, by using disk

Paging

Paging is the granularity to which we grant memory to processes

Consider a 16-bit virtual address space, and 512 byte page size, how many virtual page numbers are there?

bits for offset

Page Table Implementation

Page tables are layered in a hierarchy of page tables, with the top one called the page directory, to save on memory space

Accelerating Address Translation

Table Lookaside Buffer (TLB) is special cache on CPUs to house page table

- Most systems flush TLB on context switch, which is why they are so costly

- Some systems check an address space id to avoid flushing

- Since kernel address space is constant, it’s not flushed

Page Fault

If a process tries to read something that’s not in memory, a page fault happens

- The scheduler sets the process back to the state before the page fault happens

- The OS loads the page into memory

- The scheduler replays the process

Putting it all together

Psuedocode

def load(virtual_addr):

try:

physical_addr = translate_addr(virtual_addr)

except page_fault:

handle_page_fault(virtual_addr)

return load(virtual_addr)

if is_in_cache(physical_addr):

return read_from_cache(phyiscal_addr)

else:

return read_from_memory(physical_addr)

def translate_addr(virtual_addr):

if is_in_tlb(virtual_addr):

return read_from_tlb(virtual_addr)

elif is_access_violation(virtual_addr):

raise access_violation

elif is_present(virtual_addr):

return read_from_page_table(virtual_addr)

else:

raise page_fault

Virtually Indexed, Physically Tagged Caches

Suppose 32-bit virtual and physical address spaces. Pages are 4KB and cache blocks are 64B. What’s the maximum number of entries in a virtually indexed, physically tagged cache?

4k page size => 12 bit page offset

64B block size => 6 bit cache offset

12 - 6 = 6, 2^6 = 64